OpenStack + Synology iSCSI Storage

I recently walked into my server/laundry room and was greeted by a full array of red blinking lights on my 15-year-old Drobo. It had served me well, but it finally gave up the ghost and needed to be replaced. Thank goodness for backups!

The Drobo was a no-frills unit. By no frills I mean it had no Ethernet ports. Its only connections were FireWire and USB 2. Connected to an old laptop, it got the job done.

I ordered a Synology DS418 and was back up and running quickly. The DS418 is not top of the line, but I was pleasantly surprised by its capabilities. I had been meaning to rebuild my OpenStack cluster and was happy to see the SynoISCSIDriver, which allows a Synology NAS to be used for Cinder block storage.

First things first: you will need to create an iSCSI volume on your NAS. I already had an SHR storage pool with a Btrfs volume on it for NFS and SMB connections.

Open Storage Manager and click Volume > Custom > Choose Existing Storage Pool > Btrfs. Enter a description (OpenStack) if you would like and allocate storage. Click Apply and you are all set.

You should now be able to SSH into the NAS and see your iSCSI volume listed.

stevex0r@heartofgold:/$ ls /volume2/

@iSCSI @tmp

SSH into your OpenStack node and open the Cinder configuration file located at /etc/cinder/cinder.conf.

Add the name of your Synology NAS to the enabled backends. In this case my NAS is called heartofgold. I already had LVM storage enabled on my OpenStack installation and decided to keep it as an option.

enabled_backends=heartofgold,lvm

You can set the Synology as the default storage option if you would like.

default_volume_type=heartofgold

At the bottom of your cinder.conf, add a section to configure your NAS. I had some trouble with this until I added volume_backend_name. The official documentation lists it as an optional setting, but I found that the backend didn’t work properly without it.

[heartofgold]

volume_driver=cinder.volume.drivers.synology.synology_iscsi.SynoISCSIDriver

volume_backend_name=heartofgold

target_protocol=iscsi

target_ip_address=192.168.1.129

synology_admin_port=5000

synology_username=stevex0r

synology_password=***

synology_pool_name=volume2

Restart the Cinder volume service.

$ service openstack-cinder-volume restart

Check your Cinder services and ensure that your NAS is listed and healthy.

[root@firefly ~(keystone_admin)]# cinder-manage service list

Binary Host Zone Status State Updated At RPC Version Object Version Cluster

cinder-scheduler firefly nova enabled :-) 2021-02-04 21:52:54 3.12 1.38

cinder-volume firefly@lvm nova enabled :-) 2021-02-04 21:52:55 3.16 1.38

cinder-backup firefly nova enabled :-) 2021-02-04 21:52:55 2.2 1.38

cinder-volume firefly@heartofgold nova enabled :-) 2021-02-04 21:52:54 3.16 1.38

Keep an eye on the Cinder volume log. The service may initially be listed as healthy even when something is wrong with your configuration: if the driver fails to initialize, the service will only be listed as down a few minutes after restarting. It’s worth tailing this log while launching your first instance.

[root@firefly ~(keystone_admin)]# tail -f /var/log/cinder/volume.log

Open Horizon and navigate to Admin > Volume > Volume Types. Click “Create Volume Type”.

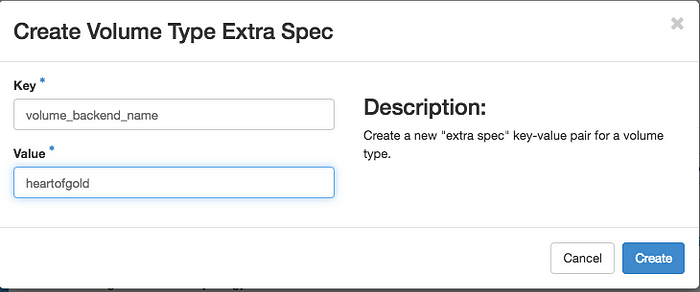

Next to your new volume type click Actions > View Extra Specs > Create.

Under Key, enter volume_backend_name. For Value, enter the volume_backend_name you set in cinder.conf (heartofgold, the name of my NAS) and click Create.

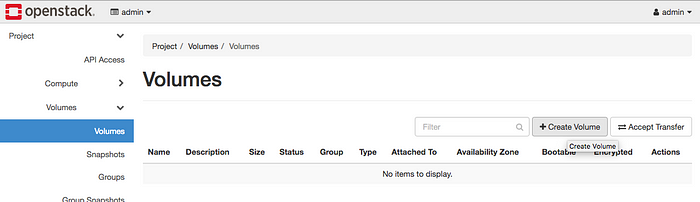
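If you would rather skip Horizon, the same two steps can probably be done from the command line. This is a minimal sketch that wraps the standard openstack volume type create and openstack volume type set --property commands. The function name create_backend_volume_type is made up for this example, and it assumes admin credentials are already loaded in your shell (the keystone_admin prompt above suggests they are).

```shell
# Sketch: create a Cinder volume type and pin it to one backend.
# create_backend_volume_type is a hypothetical helper name, not part of
# the OpenStack CLI. It only wraps two standard openstack commands.
#   $1 = name of the new volume type
#   $2 = backend name; must match volume_backend_name in cinder.conf
create_backend_volume_type() {
    # Step 1: create the volume type (the "Create Volume Type" button).
    openstack volume type create "$1" &&
    # Step 2: add the extra spec that ties the type to the backend.
    openstack volume type set \
        --property "volume_backend_name=$2" \
        "$1"
}
```

For my setup the call would be create_backend_volume_type heartofgold heartofgold, since I used the NAS name for both the volume type and the backend.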

At this point your configuration is complete. Navigate to Project > Volumes > Volumes and click Create Volume.

Give the volume a name and set its size. You should now see your new volume type listed under Type. Select it and click Create Volume.
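The same test volume can likely be created from the CLI as well. The sketch below assumes a volume type named heartofgold already exists. The helper names create_typed_volume and volume_status and the volume name test-volume are placeholders of mine; the underlying commands are the standard openstack volume create and openstack volume show.

```shell
# Sketch: create a volume on a specific volume type and check its status.
# Both helper names are hypothetical wrappers around standard commands.

# create_typed_volume NAME SIZE_GB TYPE
create_typed_volume() {
    openstack volume create --type "$3" --size "$2" "$1"
}

# volume_status NAME
# Prints only the status field. "available" means the backend created
# the volume; "error" usually means it is time to read volume.log.
volume_status() {
    openstack volume show "$1" -f value -c status
}
```

For example, create_typed_volume test-volume 1 heartofgold followed by volume_status test-volume should eventually report available if the Synology backend is working.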

Go to your Synology and open iSCSI Manager. You should see a healthy LUN created.

From here you can go ahead and launch an instance as normal. A LUN will be created, as well as a target.

Live migration woes.

In my OpenStack setup I have two compute nodes, and I was hoping to be able to perform live migrations between them. Initially I had trouble getting it to work at all. The nova logs showed an error about the SSH key located in /etc/nova/migration, saying that the key was in an incorrect format. I deleted it and generated a new SSH key.
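One possible cause of a "wrong format" complaint, and this is an assumption rather than something the logs confirmed, is a private key stored in the newer OpenSSH container format when the consumer expects classic PEM. The sketch below only inspects the first line of a key file to tell the two apart. The helper name key_format is made up, and the key path is whatever file you find under /etc/nova/migration.

```shell
# Sketch: report the container format of a private key file by looking
# at its first line. key_format is a hypothetical helper name.
#   $1 = path to the private key file
key_format() {
    case "$(head -n 1 "$1")" in
        "-----BEGIN OPENSSH PRIVATE KEY-----") echo "openssh" ;;
        "-----BEGIN RSA PRIVATE KEY-----")     echo "pem-rsa" ;;
        *)                                     echo "unknown" ;;
    esac
}

# If the key turns out to be "openssh" and PEM is required, ssh-keygen
# can write a PEM key directly (KEY_FILE is a placeholder path):
#   ssh-keygen -t rsa -b 4096 -m PEM -N "" -f "$KEY_FILE"
```

Whether this matches the error described above depends on your distribution and OpenSSH version, so treat it as a first thing to check rather than a diagnosis.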

I am now able to migrate instances. However, the target on the Synology is deleted in the process. The LUN stays intact, but the instance is unable to access it. I may be missing something obvious and will need to revisit this at some point.